What is the best way to read last lines (i.e. "tail") from a file using PHP?

Methods overview

Searching on the internet, I came across different solutions. I can group them in three approaches:

- naive ones that use

file()PHP function; - cheating ones that runs

tailcommand on the system; - mighty ones that happily jump around an opened file using

fseek().

I ended up choosing (or writing) five solutions, a naive one, a cheating one and three mighty ones.

- The most concise naive solution, using built-in array functions.

- The only possible solution based on

tailcommand, which has a little big problem: it does not run iftailis not available, i.e. on non-Unix (Windows) or on restricted environments that don't allow system functions. - The solution in which single bytes are read from the end of file searching for (and counting) new-line characters, found here.

- The multi-byte buffered solution optimized for large files, found here.

- A slightly modified version of solution #4 in which buffer length is dynamic, decided according to the number of lines to retrieve.

All solutions work. In the sense that they return the expected result from any file and for any number of lines we ask for (except for solution #1, that can break PHP memory limits in case of large files, returning nothing). But which one is better?

Performance tests

To answer the question I run tests. That's how these thing are done, isn't it?

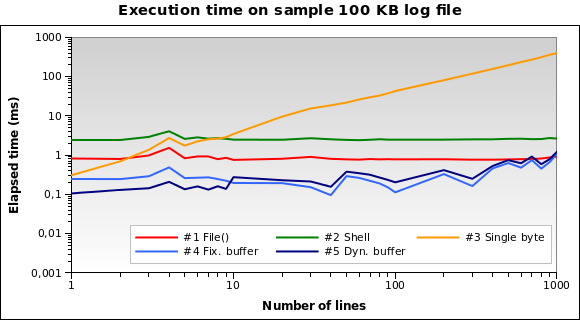

I prepared a sample 100 KB file joining together different files found in

my /var/log directory. Then I wrote a PHP script that uses each one of the

five solutions to retrieve 1, 2, .., 10, 20, ... 100, 200, ..., 1000 lines

from the end of the file. Each single test is repeated ten times (that's

something like 5 × 28 × 10 = 1400 tests), measuring average elapsed

time in microseconds.

I run the script on my local development machine (Xubuntu 12.04, PHP 5.3.10, 2.70 GHz dual core CPU, 2 GB RAM) using the PHP command line interpreter. Here are the results:

Solution #1 and #2 seem to be the worse ones. Solution #3 is good only when we need to read a few lines. Solutions #4 and #5 seem to be the best ones. Note how dynamic buffer size can optimize the algorithm: execution time is a little smaller for few lines, because of the reduced buffer.

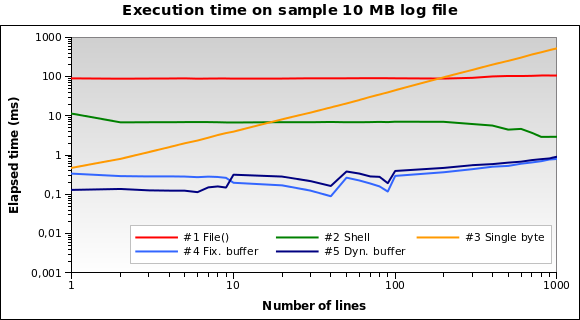

Let's try with a bigger file. What if we have to read a 10 MB log file?

Now solution #1 is by far the worse one: in fact, loading the whole 10 MB file into memory is not a great idea. I run the tests also on 1MB and 100MB file, and it's practically the same situation.

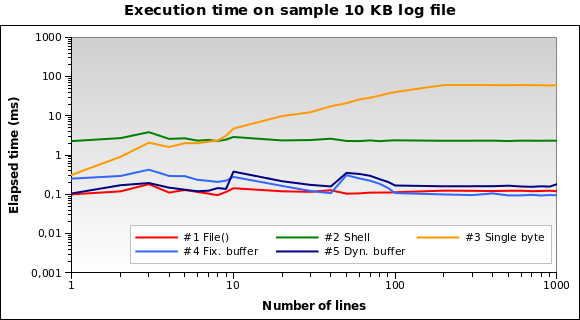

And for tiny log files? That's the graph for a 10 KB file:

Solution #1 is the best one now! Loading a 10 KB into memory isn't a big deal for PHP. Also #4 and #5 performs good. However this is an edge case: a 10 KB log means something like 150/200 lines...

You can download all my test files, sources and results here.

Final thoughts

Solution #5 is heavily recommended for the general use case: works great with every file size and performs particularly good when reading a few lines.

Avoid solution #1 if you should read files bigger than 10 KB.

Solution #2 and #3 aren't the best ones for each test I run: #2 never runs in less than 2ms, and #3 is heavily influenced by the number of lines you ask (works quite good only with 1 or 2 lines).

This is a modified version which can also skip last lines:

/**

* Modified version of http://www.geekality.net/2011/05/28/php-tail-tackling-large-files/ and of https://gist.github.com/lorenzos/1711e81a9162320fde20

* @author Kinga the Witch (Trans-dating.com), Torleif Berger, Lorenzo Stanco

* @link http://stackoverflow.com/a/15025877/995958

* @license http://creativecommons.org/licenses/by/3.0/

*/

function tailWithSkip($filepath, $lines = 1, $skip = 0, $adaptive = true)

{

// Open file

$f = @fopen($filepath, "rb");

if (@flock($f, LOCK_SH) === false) return false;

if ($f === false) return false;

if (!$adaptive) $buffer = 4096;

else {

// Sets buffer size, according to the number of lines to retrieve.

// This gives a performance boost when reading a few lines from the file.

$max=max($lines, $skip);

$buffer = ($max < 2 ? 64 : ($max < 10 ? 512 : 4096));

}

// Jump to last character

fseek($f, -1, SEEK_END);

// Read it and adjust line number if necessary

// (Otherwise the result would be wrong if file doesn't end with a blank line)

if (fread($f, 1) == "\n") {

if ($skip > 0) { $skip++; $lines--; }

} else {

$lines--;

}

// Start reading

$output = '';

$chunk = '';

// While we would like more

while (ftell($f) > 0 && $lines >= 0) {

// Figure out how far back we should jump

$seek = min(ftell($f), $buffer);

// Do the jump (backwards, relative to where we are)

fseek($f, -$seek, SEEK_CUR);

// Read a chunk

$chunk = fread($f, $seek);

// Calculate chunk parameters

$count = substr_count($chunk, "\n");

$strlen = mb_strlen($chunk, '8bit');

// Move the file pointer

fseek($f, -$strlen, SEEK_CUR);

if ($skip > 0) { // There are some lines to skip

if ($skip > $count) { $skip -= $count; $chunk=''; } // Chunk contains less new line symbols than

else {

$pos = 0;

while ($skip > 0) {

if ($pos > 0) $offset = $pos - $strlen - 1; // Calculate the offset - NEGATIVE position of last new line symbol

else $offset=0; // First search (without offset)

$pos = strrpos($chunk, "\n", $offset); // Search for last (including offset) new line symbol

if ($pos !== false) $skip--; // Found new line symbol - skip the line

else break; // "else break;" - Protection against infinite loop (just in case)

}

$chunk=substr($chunk, 0, $pos); // Truncated chunk

$count=substr_count($chunk, "\n"); // Count new line symbols in truncated chunk

}

}

if (strlen($chunk) > 0) {

// Add chunk to the output

$output = $chunk . $output;

// Decrease our line counter

$lines -= $count;

}

}

// While we have too many lines

// (Because of buffer size we might have read too many)

while ($lines++ < 0) {

// Find first newline and remove all text before that

$output = substr($output, strpos($output, "\n") + 1);

}

// Close file and return

@flock($f, LOCK_UN);

fclose($f);

return trim($output);

}

This would also work:

$file = new SplFileObject("/path/to/file");

$file->seek(PHP_INT_MAX); // cheap trick to seek to EoF

$total_lines = $file->key(); // last line number

// output the last twenty lines

$reader = new LimitIterator($file, $total_lines - 20);

foreach ($reader as $line) {

echo $line; // includes newlines

}

Or without the LimitIterator:

$file = new SplFileObject($filepath);

$file->seek(PHP_INT_MAX);

$total_lines = $file->key();

$file->seek($total_lines - 20);

while (!$file->eof()) {

echo $file->current();

$file->next();

}

Unfortunately, your testcase segfaults on my machine, so I cannot tell how it performs.